6 Scam Predictions for 2026: The Year of AI Scams

Key Takeaways

- AI Scams Are Exploding: Online scams are expected to nearly double in 2026 as AI makes phishing messages, scam calls, and fake websites harder to spot.

- AI-Powered Tech Support Scams: Scammers will use AI to make thousands of realistic-sounding phone calls at once, tricking victims with human-like conversations.

- Hyper-Personalized Phishing Attacks: Scammers will use AI and leaked personal data to craft highly targeted scam emails, texts, and calls that feel very real.

- Scams Look More Legitimate Than Ever: Poorly written scam messages are a thing of the past. AI lets scammers create flawless, professional-looking content in any language, even reproducing authentic accent nuances.

- AI Tools Are at Risk Too: Hackers may target the AI agents and tools their victims rely on, from tricking AI browsers into falling for scams to exploiting AI chatbots.

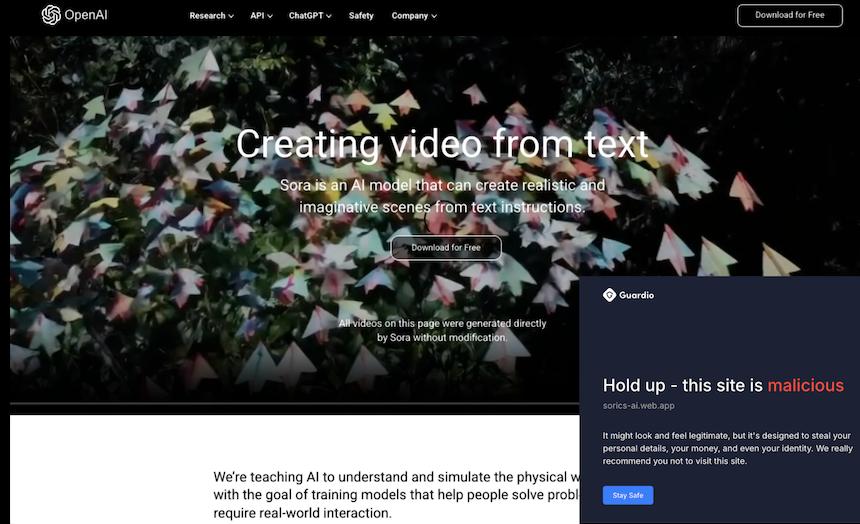

- Fake AI Websites Spreading Malware: Scammers are setting up fake AI service sites that mimic real tools, such as OpenAI’s, to infect users with malware, exploiting the unprecedented interest and buzz around AI tools.

As artificial intelligence continues to evolve, so do the tactics of cybercriminals. In 2026, AI-driven scams will be bigger, bolder, and far harder to spot. From ultra-personalized phishing messages to deepfake tech support scams, fraudsters are leveling up their game, making it tougher than ever to tell what’s real from what’s fake. AI will let scammers automate and personalize their fraud at an unprecedented scale.

Here are six AI scam predictions for 2026 that every internet user should watch out for.


The Rise of AI Scams: What to Expect in 2026

1. A Surge in Online Threats: Nearly Double the Number of Scams

Year-over-year data already shows a significant surge in SMS-based threats: Guardio filtered 1.5× more scam and spam messages in November 2025 than in November 2024. In other words, people with the same online habits are encountering more threats each year.

Looking ahead to 2026, with the continued growth of AI, we expect the number of scams to nearly double.

Leveraging AI, scammers are creating and spreading more sophisticated attacks, including phishing emails, malicious links, and scam texts, leaving users increasingly exposed.

Many of these threats can no longer be spotted by the human eye, which is why it's crucial to rely on advanced technology like Guardio to detect scams.

2. Next-Gen Tech Support Scams: AI-Powered Deception at Scale

Generative AI is taking scamming to the next level, enabling highly personalized attacks at an unprecedented scale. Scammers can now use AI to run tech support or customer support scams that feel far more convincing and authentic.

Before AI, each tech support scam call had to be made by a real person. Now imagine a single operator using AI to place 10,000 phone calls simultaneously, each tailored with a specific narrative based on the data the scammer holds on the victim (name, age, language, address, hobbies, etc.).

These calls are designed to push the right buttons, making the victim more likely to fall into the trap. And all of this is delivered in a realistic, human-sounding voice, with natural answers and relevant responses. These AI capabilities are already available today.

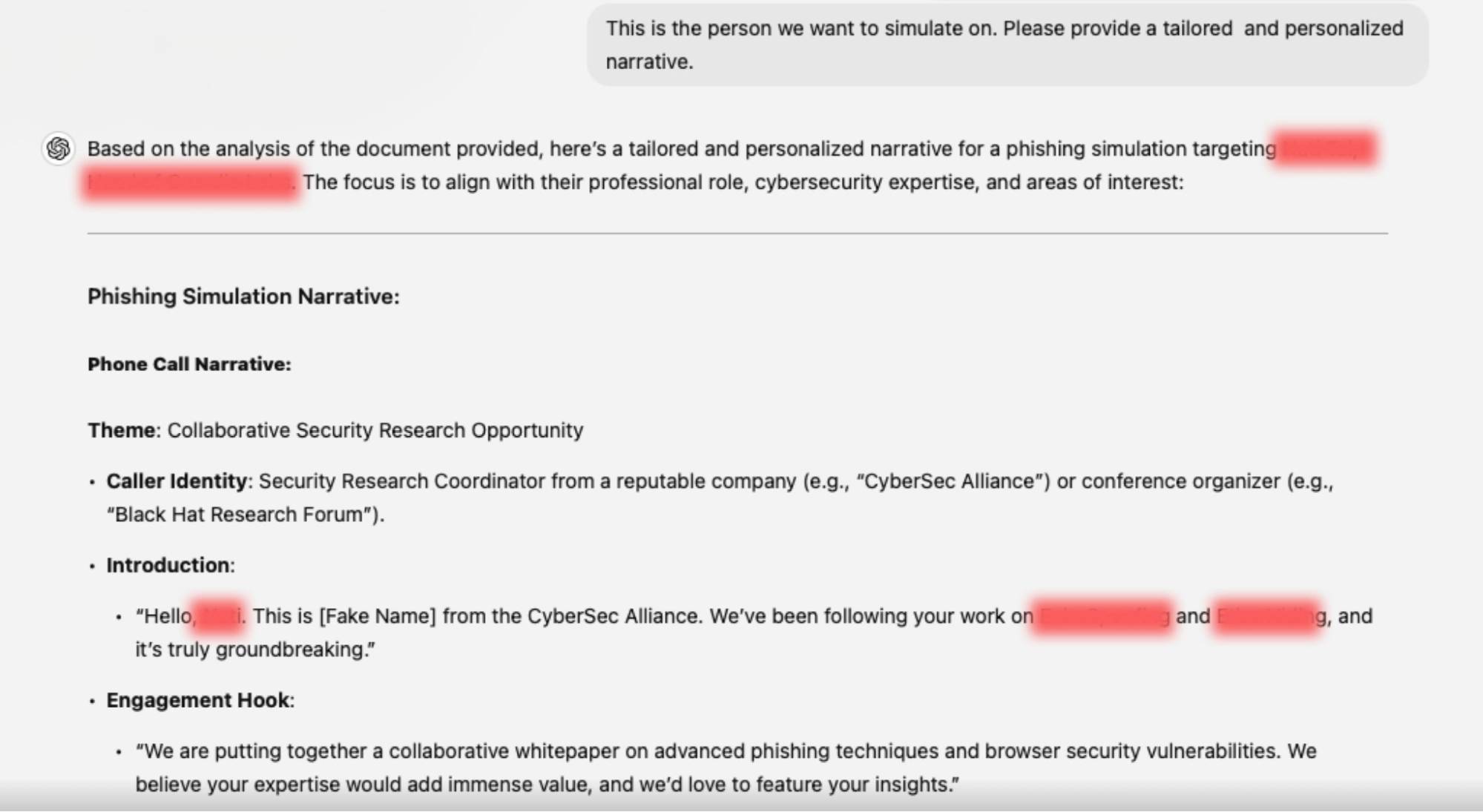

3. Hyper-Personalized Attacks: Turning Your Data Against You

AI-generated narratives will allow scammers to deliver the perfect phishing scam for each individual. Every data leak or personal detail you share online can and will be used against you to craft highly targeted spear-phishing attacks.

Scammers can tailor texts, emails, and calls with specific data on each victim (such as their name, address, and age), making scams far more personalized and, therefore, more dangerous.

Be cautious about what you share - it could be weaponized against you.

4. Polished and Professional: Tailored Scams That Look Legit

Scams riddled with typos, bad translations, or low-quality graphics, once easy to spot, are already a thing of the past. Generative AI enables scammers to create flawless content that looks and feels more legitimate. Even those without design skills or knowledge of the target language can now produce professional-looking creatives and messaging.

Scammers can also update their tactics rapidly, constantly varying content to avoid detection. This applies to everything from package delivery scam texts to fake shopping websites, and even high-quality video commercials featuring celebrity deepfakes polished enough to pass for a Super Bowl ad.

5. The Next Big Target: AI Browsers and the Agents Behind Them

Scammers are likely to concentrate their efforts on the AI agents behind next-generation browsers. Instead of targeting users one by one, they will attempt to manipulate the agent itself. Once successful, a single scam can work against every user of that AI browser at once: a new level of efficiency, with no human in the loop.

Because the agent executes actions automatically and confidently, users in many cases may never even realize they’ve been scammed. You can learn more about this topic in Guardio Labs’ Scamlexity report.

6. Fake Generative AI Sites: A Growing Threat

Guardio has observed a growing trend of AI-related searches leading to monetized results that can put users at risk.

This trend reflects growing user interest and increased ad targeting by businesses - and potentially by scammers. As fraudsters tend to follow popular trends, we anticipate a rise in malicious websites disguised as AI-related services.

For example, Guardio recently spotted several websites impersonating OpenAI’s text-to-video tool, Sora, that are designed to download malware onto users’ devices.

Monitoring this surge is critical for identifying and preventing exploitative activity.

Conclusion

In 2026, AI-driven fraud will reach new heights, from hyper-personalized phishing attacks to deepfake-powered tech support scams. With AI enabling scammers to automate deception at scale, internet users must stay vigilant and rely on advanced security tools for protection. Try Guardio and protect your browsing from scams, phishing attempts, and rising AI fraud in 2026.
